6x USB Flash Drive RAID

SanDisk Cruzer Titanium x6

Can a software RAID array of 6 USB flash drives perform well? Is it silly to even try? Well, there's one way to find out.

Flash memory as a main storage medium is a relatively new phenomenon. Flash is known for its lack of seek time, so we wanted to see just how much bandwidth we could squeeze out of these devices over the USB bus.

It turns out that even with all of the limitations of the USB bus working against us, we were still able to obtain some very good results at a very nice price point.

The USB Bus

USB is very convenient for peripherals, but it was not designed for high-bandwidth I/O. USB 2.0 Hi-Speed mode runs at 480 megabits per second, which translates into 60 megabytes per second. A single host controller can have up to 127 devices connected, but all of them share the same 480 megabits of bandwidth. The USB protocol has no client-initiated interrupts, so the host controller must poll each device at regular intervals. Furthermore, the bus's packet size is small, so a significant share of the bandwidth is eaten by packet overhead.
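The conversion from the signalling rate to bytes is plain arithmetic, and the short Python sketch below spells it out. The function name is ours and purely illustrative, and the result is a raw ceiling before any polling or packet overhead is subtracted.

```python
def mbit_to_mbyte(megabits_per_second: float) -> float:
    """Convert a rate in megabits/s to megabytes/s (8 bits per byte)."""
    return megabits_per_second / 8


USB2_HI_SPEED_MBIT = 480  # USB 2.0 Hi-Speed signalling rate

raw_ceiling = mbit_to_mbyte(USB2_HI_SPEED_MBIT)
print(f"USB 2.0 Hi-Speed raw ceiling: {raw_ceiling:.0f} MB/s")
```

Real transfers will always come in below this number, since the figure ignores protocol overhead entirely.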

Hardware

- Flash Drives: 6x SanDisk Cruzer Titanium 2 GB (12 GB in total). These had a street price of about $20 each when purchased. Besides being relatively fast, they also look sleek.

- USB Controllers: 2x Intel 82801I (ICH9 Family) USB controllers. These were the controllers accompanying standard USB ports on the motherboard. These are ordinary and compliant devices.

Software

- U3 Removal Tool (requires Windows): stops the flash device from presenting a fake auto-run CD-ROM device when plugged in.

- Linux: Kernel v2.6.24.4 x86_64

- Raid tool: mdadm v2.6.4

- Raw read tool: dd

- Filesystem benchmark: Bonnie++

Except for the U3 removal, these tools were used in a System Rescue CD livecd environment.

Removing the U3 Software

The first thing we did was plug in a single Cruzer to test its speed. Out of curiosity we checked dmesg to see what it shows:

    [ 316.394543] usb 2-7: new high speed USB device using ehci_hcd and address 5
    [ 316.455304] usb 2-7: configuration #1 chosen from 1 choice
    [ 316.456930] scsi7 : SCSI emulation for USB Mass Storage devices
    [ 316.460152] usb-storage: device found at 5
    [ 316.460157] usb-storage: waiting for device to settle before scanning
    [ 318.730555] usb-storage: device scan complete
    [ 318.731462] scsi 7:0:0:0: Direct-Access SanDisk U3 Titanium 3.27 PQ: 0 ANSI: 2
    [ 318.732368] scsi 7:0:0:1: CD-ROM SanDisk U3 Titanium 3.27 PQ: 0 ANSI: 2
    [ 318.748159] sd 7:0:0:0: [sdb] 4001422 512-byte hardware sectors (2049 MB)
    [ 318.749558] sd 7:0:0:0: [sdb] Write Protect is off
    [ 318.749563] sd 7:0:0:0: [sdb] Mode Sense: 03 00 00 00
    [ 318.749567] sd 7:0:0:0: [sdb] Assuming drive cache: write through
    [ 318.754611] sd 7:0:0:0: [sdb] 4001422 512-byte hardware sectors (2049 MB)
    [ 318.755914] sd 7:0:0:0: [sdb] Write Protect is off
    [ 318.755919] sd 7:0:0:0: [sdb] Mode Sense: 03 00 00 00
    [ 318.755922] sd 7:0:0:0: [sdb] Assuming drive cache: write through
    [ 318.755927] sdb: sdb1
    [ 318.757017] sd 7:0:0:0: [sdb] Attached SCSI removable disk
    [ 318.757051] sd 7:0:0:0: Attached scsi generic sg2 type 0
    [ 318.765002] sr1: scsi3-mmc drive: 8x/40x writer xa/form2 cdda tray
    [ 318.765052] sr 7:0:0:1: Attached scsi CD-ROM sr1
    [ 318.765085] sr 7:0:0:1: Attached scsi generic sg3 type 5
    [ 319.357396] cdrom: This disc doesn't have any tracks I recognize!

Well, that was odd. We hadn't noticed any CD-ROMs attached to our flash drives.

It turns out that this artificial CD-ROM is how the drive normally installs its U3 software in Windows via autorun. Googling showed us that we could remove the U3 software from within Windows. So we booted Windows, downloaded the U3 removal tool, and removed U3 from all the devices. This isn’t technically necessary, because the drives still work in Linux with the fake CD-ROMs ignored, but it made dmesg cleaner. And cleanliness has some relation to godliness, or so it’s been said.

A Single Device Benchmark

As for initial benchmarks on a single stick, SanDisk claims 9 MB/s write and 20 MB/s read. Using dd on the raw /dev/sdX devices showed that the devices write at 7-9 MB/s and read at 18-20 MB/s. Interestingly, writing to a device changed its read rate, perhaps because of internal wear leveling. Overall, the flash drives performed almost identically to one another.
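For readers who would rather script the measurement than read dd's summary line, here is a minimal Python sketch of the same idea: read a device or file sequentially in large blocks and divide bytes by elapsed time. It is not the tool we used (that was dd). The function name and defaults are our own assumptions, and the kernel page cache can inflate repeated runs.

```python
import time


def read_throughput(path, block_size=1024 * 1024, max_bytes=256 * 1024 * 1024):
    """Sequentially read up to max_bytes from path.

    Returns (bytes_read, megabytes_per_second), with 1 MB = 10**6 bytes.
    """
    total = 0
    start = time.perf_counter()
    # buffering=0 skips Python's own buffer; the kernel page cache still applies.
    with open(path, "rb", buffering=0) as source:
        while total < max_bytes:
            chunk = source.read(min(block_size, max_bytes - total))
            if not chunk:  # end of file or device
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    rate = total / 1e6 / elapsed if elapsed > 0 else float("inf")
    return total, rate


# Hypothetical usage (needs read permission on the raw device):
#   nbytes, mb_s = read_throughput("/dev/sdb")
#   print(f"{nbytes} bytes at {mb_s:.1f} MB/s")
```

Reading a raw device usually requires root, and the device name above is only a placeholder.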

Raid0 on a Single USB Bus

As we mentioned earlier, a USB bus has a bandwidth of at most 480 megabits per second. It would be useful to know how many devices you can put on a single USB bus before adding another stops increasing the bandwidth you can squeeze out of one host controller.

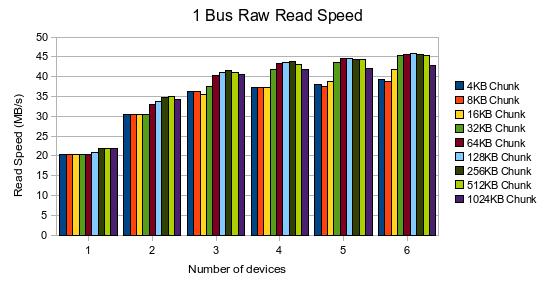

So we plugged in devices and started running dd. This is what we found:

After three devices the read speed was almost completely topped out; adding more devices brought only a marginal gain. This makes sense, since three 20 MB/s devices should be able to saturate a 60 MB/s bus. The chunk size of the RAID array did have some influence on its speed. It seems that even though seek times are close to zero, there is still initialization and bus contention to deal with, which puts the optimal chunk size somewhere in the 32k-512k range.
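The saturation argument can be written down as a best-case model: throughput grows linearly with the number of devices until the shared bus is full. The Python sketch below is that idealized model only, with a function name of our own choosing. It ignores polling and packet overhead, which is why the measured plateau sits below the 60 MB/s it predicts.

```python
def ideal_raid0_throughput(devices, per_device_mb_s, bus_limit_mb_s):
    """Best-case RAID0 rate: linear scaling, capped by the shared bus."""
    return min(devices * per_device_mb_s, bus_limit_mb_s)


# Reads: roughly 20 MB/s per stick on a 60 MB/s (raw) USB 2.0 bus.
for n in range(1, 7):
    rate = ideal_raid0_throughput(n, per_device_mb_s=20, bus_limit_mb_s=60)
    print(f"{n} device(s): {rate} MB/s")
```

In this model the cap is reached at three devices, matching where the measured read curve flattens.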

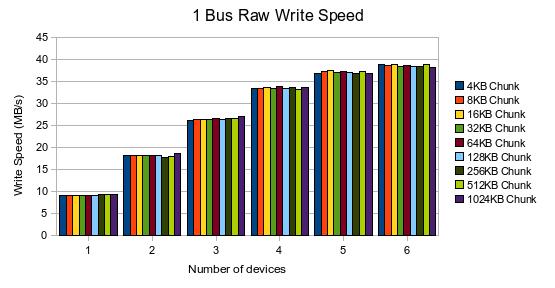

But what about write speeds? Each device writes at only 9 MB/s tops, so theoretically we should see gains with up to 6 or even 7 devices. But what about in practice?

At first there are linear gains when adding devices. However, by the time we added the fourth device we started to see some bus limitation penalties. It does seem that chunk size has very little impact on the speed of write operations.

Overall, read speeds on a single bus top out at about 45 MB/s, while write speeds max out at 38 MB/s.

Raid0 on a Pair of USB Buses

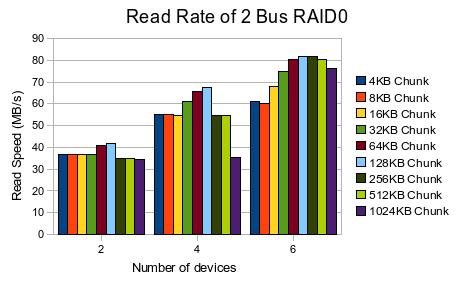

But wait a second, this motherboard has two buses. We plugged half of the devices into the first host controller and half into the second. When creating the RAID, you have to choose the order in which the array uses the devices. Tests showed that interleaving devices from the two buses made no difference compared with listing each bus's devices together. We chose to interleave them anyway.
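To make the device ordering concrete, here is a small Python sketch that interleaves two lists of devices and builds an mdadm command line from the result. The device names, the /dev/md0 array name, and the 64 KiB chunk size are illustrative assumptions rather than the exact values we used. The sketch only prints the command and does not run it.

```python
from itertools import chain, zip_longest
from typing import List, Sequence


def interleave(bus_a: Sequence[str], bus_b: Sequence[str]) -> List[str]:
    """Alternate devices from the two buses: a0, b0, a1, b1, ..."""
    pairs = zip_longest(bus_a, bus_b)
    return [dev for dev in chain.from_iterable(pairs) if dev is not None]


def mdadm_create_args(md_device: str, devices: Sequence[str], chunk_kib: int) -> List[str]:
    """Build (but do not run) an mdadm RAID0 creation command."""
    return [
        "mdadm", "--create", md_device,
        "--level=0",
        f"--raid-devices={len(devices)}",
        f"--chunk={chunk_kib}",  # mdadm takes the chunk size in KiB
        *devices,
    ]


# Hypothetical device names: three sticks on each host controller.
bus_one = ["/dev/sdb", "/dev/sdc", "/dev/sdd"]
bus_two = ["/dev/sde", "/dev/sdf", "/dev/sdg"]

order = interleave(bus_one, bus_two)
print(" ".join(mdadm_create_args("/dev/md0", order, chunk_kib=64)))
```

Which device node lands on which controller varies from boot to boot, so check dmesg or /sys before trusting any particular naming.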

With 6 devices we reach 80 MB/s. It appears that the optimal chunk size actually depends on the number of devices used.

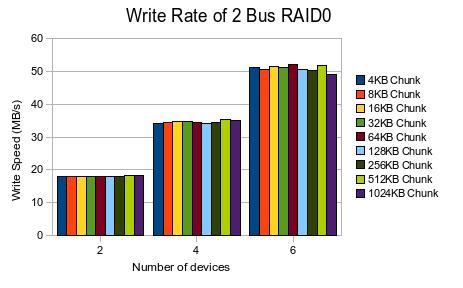

Let's take a look at write speeds.

As we saw earlier on the single bus, when there are very few devices on a bus, the performance gain from adding another device is nearly linear. With two buses, the raw speeds from dd show a very decent read rate of 80 MB/s and a write rate of 50 MB/s.
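It can help to put the measured dd numbers next to the raw ceilings. The Python sketch below does that, assuming each host controller has its own independent 60 MB/s limit (an assumption on our part, and one that ignores all protocol overhead). It also compares the 6-device write rate to the roughly 9 MB/s per-stick write limit.

```python
RAW_BUS_MB_S = 60  # 480 Mbit/s divided by 8, per host controller

# (number of buses, operation) -> MB/s measured with dd, as quoted in the text
measured = {
    (1, "read"): 45,
    (1, "write"): 38,
    (2, "read"): 80,
    (2, "write"): 50,
}


def bus_utilisation(buses, mb_s):
    """Fraction of the combined raw bus ceiling that a measured rate reaches."""
    return mb_s / (buses * RAW_BUS_MB_S)


for (buses, operation), mb_s in measured.items():
    share = bus_utilisation(buses, mb_s)
    print(f"{buses} bus(es), {operation}: {mb_s} MB/s is {share:.0%} of the raw ceiling")

# Six sticks writing at about 9 MB/s each cannot exceed 54 MB/s in total,
# so the 2-bus write figure is close to what the devices themselves allow.
device_write_ceiling = 6 * 9
print(f"2-bus write vs. device limit: {50 / device_write_ceiling:.0%}")
```

Read this way, the two-bus write result looks limited mostly by the sticks themselves, while reads are still limited by the buses.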

Filesystem tests

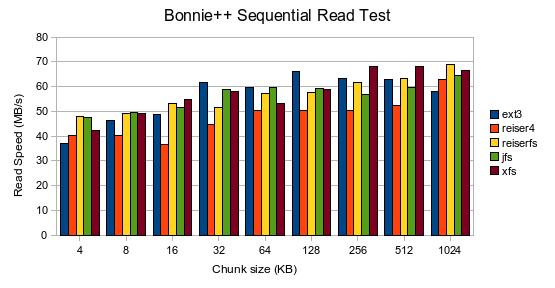

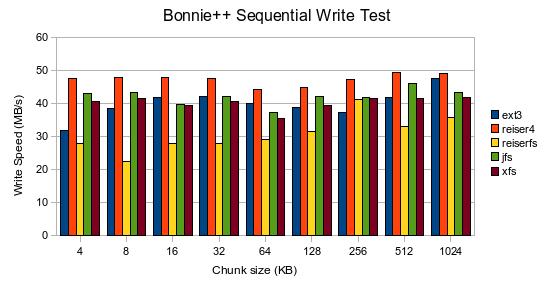

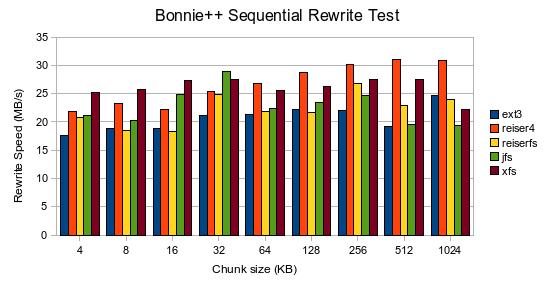

Our last test was to take our best setup, the 2-bus 6-device configuration, and try different filesystems on it with Bonnie++.

An interesting note is that Reiser4 shone in the benchmarks. However, it performed very poorly when used as the root and home partition of a desktop system.

On our desktop system we tried Reiser4 with LZO compression and Reiser4 with default settings. In general, performance was extremely good, especially with LZO compression. The problem we encountered was very long pauses when writing to files. For instance, saving in emacs sometimes had a significant delay, as much as half a second. Other programs, such as pidgin, made the system stutter every time a window was moved around the screen (was it writing to configuration files?). It might have something to do with synchronous I/O calls, because not every program did it. In retrospect, it would have been smart to also record and evaluate seek times and small-file tests on each filesystem, because the chunk size might have had an adverse effect on such operations.
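If synchronous writes are indeed the culprit, a small latency probe would show it. The Python sketch below is the kind of test we wish we had run, not one we actually ran: it times small write-plus-fsync cycles in a directory on the filesystem under test. The function name, the 4 KiB write size, and the mount point in the usage comment are all our own assumptions.

```python
import os
import tempfile
import time


def fsync_write_latencies(directory, writes=50, size=4096):
    """Time small write+fsync cycles in directory; returns seconds per cycle."""
    payload = b"x" * size
    latencies = []
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        for _ in range(writes):
            start = time.perf_counter()
            os.write(fd, payload)
            os.fsync(fd)  # force the data out to the device before continuing
            latencies.append(time.perf_counter() - start)
    finally:
        os.close(fd)
        os.unlink(path)
    return latencies


# Hypothetical usage, with the RAID mounted at /mnt/flashraid:
#   samples = fsync_write_latencies("/mnt/flashraid")
#   print(f"worst case: {max(samples) * 1000:.1f} ms")
```

Comparing the worst-case latency across filesystems would say more about desktop responsiveness than the average throughput does.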

The only other filesystem tested on a live desktop system was ext3 and it performed beautifully. Applications with traditionally long loading times (GIMP, OpenOffice, etc) start considerably faster than on a traditional hard drive.

Conclusions

The performance of the RAID is actually remarkably good. Compared to buying a standard SSD, this RAID beats all available drives on price-to-performance ratio. Based on prices at popular online stores, this solution costs less than half the price of most current SSDs and has the same read and write speeds as the higher-end SSDs.
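For anyone who wants to run the comparison against a specific SSD, the Python sketch below computes the cost figures for our array from the numbers in this article: six sticks at about $20 each, 12 GB in total, and the 2-bus dd rates. It deliberately contains no SSD prices, so substitute the drive you are considering. The function name is ours.

```python
DRIVES = 6
PRICE_PER_DRIVE_USD = 20  # approximate street price when purchased
CAPACITY_GB = 12
READ_MB_S = 80            # 2-bus, 6-device dd read rate
WRITE_MB_S = 50           # 2-bus, 6-device dd write rate


def cost_metrics(total_usd, capacity_gb, read_mb_s, write_mb_s):
    """Dollars per GB and per MB/s of sequential read and write throughput."""
    return {
        "usd_per_gb": total_usd / capacity_gb,
        "usd_per_read_mb_s": total_usd / read_mb_s,
        "usd_per_write_mb_s": total_usd / write_mb_s,
    }


array = cost_metrics(DRIVES * PRICE_PER_DRIVE_USD, CAPACITY_GB, READ_MB_S, WRITE_MB_S)
for name, value in array.items():
    print(f"{name}: {value:.2f}")
```

Calling cost_metrics with another drive's price, capacity, and measured rates gives directly comparable numbers.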

We are left with one big unknown, though. Flash is known to eventually fail after a certain number of writes, so will our RAID fail quickly? USB flash sticks aren’t renowned for their reliability.

Based on the feel of the system, we have chosen to use this RAID as part of a production system (we will keep Bacula running just to be safe). Day 1 of the flash-raid-as-root starts today.

If you are interested in building your own flash raid, you might want to check out Howto: Boot Linux with a USB Flash Drive Raid as the RootFS.